“True Wit is Nature to advantage dress'd

What oft was thought, but ne'er so well express'd.”

― Alexander Pope

“But in the end, stories are about one person saying to another: This is the way it feels to me. Can you understand what I’m saying? Does it feel this way to you?”

― Kazuo Ishiguro

By now, you’ve likely heard about ChatGPT.* It’s a chatbot developed by San Francisco company OpenAI that can answer your questions, tell jokes, write and debug code — and yes, chat with you. It can even write stories, essays, articles, and poems.

ChatGPT burst onto the scene on November 30, 2022, and within five days it had 1 million users.

How will ChatGPT change our world?

But more significant than its number of early users is its potential to affect our world.

Since it’s hard to predict the future, no one knows exactly what that will look like — which gives rise, not surprisingly, to plenty of hyperbole: ChatGPT will change the world as we know it, precipitate a seismic shift in how content is created, and lead to mass unemployment. It will wreak havoc. It will make our education system obsolete, replace lawyers, developers, and writers, and kill companies.

Some are already (misguidedly) trying to use it to replace writers:

There’s obviously a lot of room for speculation. Medium has asked its writers to weigh in, and one of them even cooked up a sinister scenario, filled with horror and dread, imagined by the AI itself. A ChatGPT-written article in the Guardian provided a counterpoint, based on a more benign prompt. As with most software, what you get depends on what you put in.

Any big technological advance causes (probably warranted) apprehension, and ChatGPT represents a huge leap in AI that only hints at what the not-too-distant future might bring.

ChatGPT’s limitations

Rather than attempt to predict the future, I decided to take ChatGPT for a spin. For a couple of days I couldn’t even log on, because the system was overloaded with users eager to try it out.

When I did gain access, I breathed a sigh of relief.

ChatGPT is indeed impressive. The AI, especially as it’s refined over time, will undoubtedly yield some amazing applications.

But it can’t replace human writers.

I started by asking ChatGPT to write some articles on topics I’ve covered in this newsletter. The results were refreshingly bland. Here are just a few of the many I attempted:

ChatGPT’s formula seems to be:

Explain the basics.

Give an example or two.

Sum everything up with a paragraph that begins with “Overall, …” (to be fair, some subsequent attempts ended with “So, …” or even “In conclusion, …”).

My examples highlight some of ChatGPT’s limitations. The AI can produce a perfectly respectable 9th-grade essay. Some even claim it can tackle college-level essays (if professors are okay with them all ending with “Overall, … ”).

But even if ChatGPT can do this, which is debatable, it can’t add a human point of view or a real voice. It can’t draw from personal experiences, emotions, or insights. It can’t do what Pope and Ishiguro so eloquently invoke in the quotes at the top of this post.

ChatGPT agrees with me — based on my prompt to write an article titled “Why ChatGPT can’t replace a human writer,” which was definitely leading the witness. But when I tried again with the prompt “Why ChatGPT can replace a human writer,” it still ended up more or less conceding that it can’t quite be done.

I got into a Twitter discussion with someone who claimed that while ChatGPT is “trained to be pretty bland by default,” you can “get pretty spectacular results by fine-tuning your prompt to elicit the right kind of responses.” I asked if he could get it to write a good post on It’s a Wonderful Life as a Hero’s Journey. The result:

In addition to resorting to clichés and platitudes, ChatGPT is limited to providing information. It’s not really up to the task of being creative or engaging in deep thinking.

Fun with ChatGPT

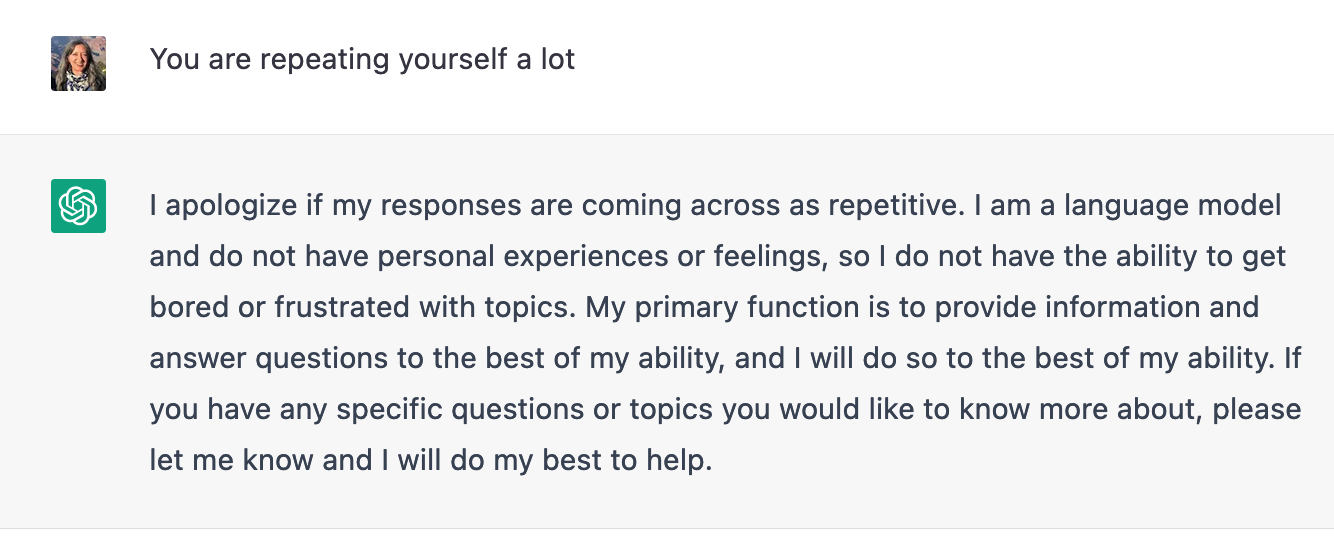

Even when used for a chatbot’s main purpose, chatting (which includes providing information and answering questions), ChatGPT can be tedious, confusing, and frustrating. You can count on it to be polite, though:

It can also provide entertainment. Witness some of the early prompts people gave it, like these:

To be sure, a human could have produced these just as admirably, if not quite as quickly. What makes these fun is knowing they were produced by a chatbot, which most of us don’t expect to be funny. At the same time, the result depends on the creativity of the human providing the prompt.

For me, ChatGPT’s entertainment function is limited and quickly becomes boring. Of course, I enjoy writing; I’d much rather spend my time writing than playing with a chatbot. Others undoubtedly feel the opposite.

What comes next?

However you feel about ChatGPT, its entertainment value is likely to be short-lived. Certainly, the chatbot’s ability to entertain doesn’t represent a significant value to humanity.

Other functions will be more useful, and it may yet yield truly valuable, astonishing results. My husband, an avid follower of AI, reminds me that AI is still in its infancy, and it’s likely to surprise us in ways we can’t even conceive of yet — sooner than we think.

As a Flower Child, I’m not sure I’m ready for that. I’m not sure that anyone is. I often think about the technological advances that have happened during my life so far and wonder what’s to come in 10 or 20 years that we can’t imagine now. We all expected some kind of portable communication device, but who would have thought we’d carry little computers in our pockets? Or that social media would exist, let alone become a threat to democracy? What if Stephen Hawking was right when he warned that AI’s emergence could be the “worst event in the history of our civilization”?

I don’t have the answers, any more than anyone does.

But it’s good to know that, at least for now, I can’t be replaced by a chatbot.

* A chatbot is a computer program that uses AI and natural language processing to understand and respond to questions, simulating human conversation. GPT, which stands for “generative pre-trained transformer,” is a specific type of language model; pre-trained means that the AI has learned from lots of internet data.

What do you think about AI, and about chatbots? Have you tried ChatGPT? Let me know in the comments!

ChatGPT, and all other AI innovations so far, including Alexa and Dall-E, can surprise us with their parlor tricks. And those tricks are impressive, especially when it comes to natural language processing. But they are still some distance away from "understanding." There's a brilliant thought experiment called the Chinese Room, by the philosopher John Searle (https://plato.stanford.edu/entries/chinese-room/), that helps to explain why.

It is worth considering what that argument means. In a nutshell, it suggests that operating a syntax does not generate semantics, at least not by itself. Or stated a bit more plainly, a tool cannot understand the problem to which it is applied.

I bet if you ask ChatGPT to "explain itself" or even to explain one of its assignments, it will fail to express that it has any understanding. Like the "Chinese Room," it can operate a language syntax, and augmented with a searchable data store (like the Internet), it can "compile" a readable text that YOU can understand. But, at least at the present time, ChatGPT cannot understand its own utterances, much less itself.

(A corollary: simply passing the Turing Test does not guarantee that the "device" understands, even if it has intelligently found, translated, and expressed symbols.)

Given enough evolutionary pressure, sensory capabilities, and processing abilities, however, it may be possible that "understanding" capabilities will *emerge* as a consequence. It is not likely to be "built in" to the device via programming or modeling or data. "Emergence" seems to be how the level of "semantic intelligence" we witness in some living organisms, especially birds, mammals, and octopuses, came about: it emerged as a consequence of the neural capabilities in the organism.

So, "overall," yes, for now anyway, ChatGPT will not replace you. Mostly.

I’m finding that ChatGPT is good for outlining topics, like for my newsletter. It’s a fascinating starting point. It can be extremely bland, but the Bible story was great.

It reminds me of a story I read in grade school, so the 70s. One of a group of kids had a dad who was a computer scientist, and the dad created a computer that could write papers. The kids just had to input their textbooks, and once they did, the computer wrote the papers for them. But they realized that in the process of inputting the info, they learned the subjects themselves. Pretty similar to what’s happening with A.I. these days. You need to know what to ask.